GenHelm

There are many factors that determine the quality of a website. These can broadly be divided into two categories:

Obviously, websites need to look nice and present useful and relevant information. This post focuses primarily on the internal characteristics of websites. We break down our checklist into the following functional areas:

This section covers the technical aspects of your site.

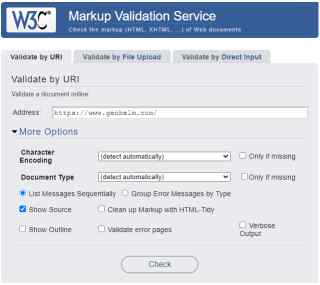

Today's browsers are very forgiving when it comes to rendering invalid HTML, so a page that "looks" correct is not necessarily syntactically correct. The best way to check the syntax of a page is by using the W3C Validator. When using this tool, you will generally want to mark the Show Source option, as shown below, so that errors are shown within the context of the full HTML document.

If your site has a sandbox, you should normally perform all of your validation using the URLs of your sandbox so that you can easily retest your pages as you make corrections. You should correct all errors identified. Warnings and Info messages are not as critical but should be reviewed as well and corrected if possible.
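If you validate many pages, it can help to triage the results in a script. The sketch below assumes you have already fetched the validator's JSON output (the W3C checker can return JSON when `out=json` is added to the request) and simply counts messages by severity. The `summarize_messages` name and the sample report are illustrative, and the handling of `type` and `subType` reflects our reading of that JSON format rather than a complete treatment of it.

```python
from collections import Counter

def summarize_messages(report):
    """Count validator messages by severity: error, warning or info."""
    counts = Counter()
    for msg in report.get("messages", []):
        if msg.get("type") == "error":
            counts["error"] += 1
        elif msg.get("subType") == "warning":
            # Warnings are reported as type "info" with subType "warning".
            counts["warning"] += 1
        else:
            counts["info"] += 1
    return dict(counts)

# Hypothetical sample, shaped like the validator's JSON output.
sample = {"messages": [
    {"type": "error", "lastLine": 12, "message": "Stray end tag."},
    {"type": "info", "subType": "warning", "message": "Consider adding a lang attribute."},
    {"type": "info", "message": "Trailing slash on void elements has no effect."},
]}
print(summarize_messages(sample))
```

A page is ready to move on from when the error count reaches zero; the remaining warnings and info messages can then be reviewed one by one.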

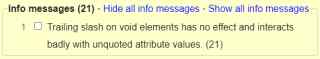

If you are validating a site built by GenHelm, you will most likely receive informational messages similar to the following:

This is because the supplied GenHelm models generate HTML that also validates as XML in case you ever want to analyse the HTML using an XML parser. These informational messages can be ignored.

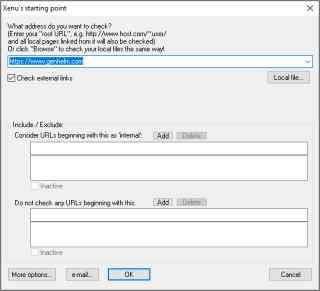

Xenu's Link Sleuth is an old utility but it still does a good job of analysing sites for broken links and identifying other quality problems. Simply download this tool and choose the Check URL option under the File menu to scan your entire site. It is a good idea to mark the option to Check external links as shown here.

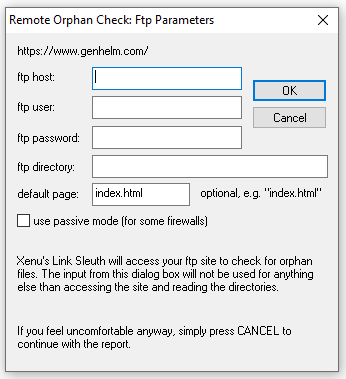

When Link Sleuth finishes scanning your site, you will be asked if you would like a report. Click Yes.

On the next dialog, just click Cancel.

If you have access to your site's error log, it is a good idea to check it now. Xenu's Link Sleuth has just loaded every page, so this is a good time to confirm that none of the pages have triggered server-side errors. Note that the absence of server log errors at this point does not give your site a clean bill of health since Xenu has only issued GET requests against your site's pages.

Review all of the broken links shown in the report and correct these. Note that some "broken" links may be false positives since Xenu does not comprehend certain types of links such as tel and sms anchors.
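If you post-process a list of reported links yourself, the false positives can be filtered out by looking at the URL scheme. This is a minimal sketch using Python's standard library; `is_checkable` and the set of fetchable schemes are our own assumptions, not something Xenu provides.

```python
from urllib.parse import urlsplit

# Schemes a crawler can actually fetch; anything else (tel:, sms:, mailto:)
# is not a page and should not be counted as a broken link.
FETCHABLE_SCHEMES = {"http", "https"}

def is_checkable(link):
    """Return True if the link is something a link checker can request."""
    scheme = urlsplit(link).scheme.lower()
    # Relative links have no scheme and resolve against the page URL.
    return scheme == "" or scheme in FETCHABLE_SCHEMES

reported = ["https://www.genhelm.com/", "/contact", "tel:+1-555-555-1212", "sms:+15555551212"]
print([link for link in reported if is_checkable(link)])
```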

When reviewing links in Xenu, be sure to check links which have been redirected. In most cases, when you see 301 redirects you should change your site to refer to the target of the redirect in order to avoid the slight delay caused by the redirect operation.

Every site should have a robots.txt file. If you are running under the GenHelm framework, this is rendered automatically. Generally, your production robots.txt file should look something like this:

User-agent: *
Disallow:
Sitemap: https://www.genhelm.com/sitemapindex.xml

In this case we want to allow all user agents and we don't want to disallow any pages. You should not use this file to list the URLs that you don't want to be indexed. This just invites hackers to try to access these pages. Instead you should set the robots metatag on the pages that you do not want to be indexed like so:

<meta name="robots" content="noindex,noarchive,nofollow">

If you have a separate sandbox site, this should have a separate robots.txt file that looks like this:

User-agent: *
Disallow: /

This will prevent all (legitimate) search engines from crawling the sandbox site. You never want your sandbox to be indexed since this could result in duplicate content penalties for your production site.
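One way to sanity-check both files before deploying them is Python's standard `urllib.robotparser` module. This is a minimal sketch: `can_crawl` is a helper name of our own, the sandbox hostname is a placeholder, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

def can_crawl(robots_txt, url, agent="Googlebot"):
    """Parse robots.txt text and report whether the agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)

PRODUCTION = (
    "User-agent: *\n"
    "Disallow:\n"
    "Sitemap: https://www.genhelm.com/sitemapindex.xml\n"
)
SANDBOX = "User-agent: *\nDisallow: /\n"

print(can_crawl(PRODUCTION, "https://www.genhelm.com/"))          # True
print(can_crawl(SANDBOX, "https://sandbox.example.com/any-page"))  # False
```

Note that the User-agent and Disallow lines must stay together in one group; a blank line between them starts a new record and can cause the rule to be ignored.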

Notice that the production robots.txt file provides a sitemap URL, which returns an XML file. Every site should have an XML sitemap. This is used to provide search engines with a list of all of the pages to be indexed within your site. If you have a very large site, say more than a few hundred pages, you may want to have several separate sitemaps. In this case, the sitemap referenced within robots.txt is actually an index of other XML sitemaps. Here is an example of a sitemap index which references two sitemaps:

<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">

<sitemap>

<loc>https://www.genhelm.com/sitemap_main.xml</loc>

</sitemap>

<sitemap>

<loc>https://www.genhelm.com/blog_sitemap.xml?exclude_category_page=true&exclude_category_post_page=true&topic=genhelm</loc>

</sitemap>

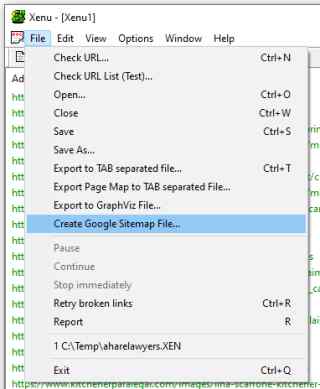

</sitemapindex>If your site is developed using GenHelm, XML sitemaps are generated automatically. If you don't yet have an XML sitemap, the Xenu Link Sleuth can create one for you. After crawling your site, choose the Create Google Sitemap File... under the File menu as shown.

You will be prompted for a location in which to save the generated XML sitemap. After saving it locally, you will need to upload it to your site, making sure that its name and location match those referenced in robots.txt.

If you already have an XML sitemap, it is still a good idea to use Xenu to generate one. Then you can use a comparison tool like this one to compare your existing sitemap with the one generated by Xenu.
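The comparison can also be scripted. The following sketch, which assumes both sitemaps are available as XML text, extracts every `<loc>` value and reports the URLs found in only one of the two files. The function names are our own and the code uses only Python's standard library.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemap protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text):
    """Return the set of <loc> URLs listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall(".//sm:loc", NS) if loc.text}

def compare_sitemaps(existing_xml, generated_xml):
    """Return (only in existing, only in generated) as two sorted lists."""
    existing = sitemap_urls(existing_xml)
    generated = sitemap_urls(generated_xml)
    return sorted(existing - generated), sorted(generated - existing)
```

URLs that appear only in the generated sitemap are pages the crawler reached that your own sitemap omits; URLs that appear only in your existing sitemap may be orphaned pages that nothing links to.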

Your site should have a valid SSL certificate so that all pages can be served under the encrypted HTTPS protocol. These days, there is no reason not to install an SSL certificate since these can be obtained for free from services like Let's Encrypt. Furthermore, you should make sure that references to pages under HTTP are automatically redirected to the appropriate HTTPS page. Additionally, you should standardise the form of the URL you wish to serve to prevent URLs such as genhelm.com from being served in addition to www.genhelm.com. Instead, URLs such as genhelm.com or genhelm.com/index.php?page=home should always be 301 redirected to https://www.genhelm.com.

It does not matter whether your standard URLs are the www version or the non-www version as long as there is exactly one URL format for each page of your site.
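To make the "exactly one URL format" rule concrete, here is a small sketch that computes the standard form of a URL so that it can be compared with what was requested. It only normalises the protocol and host name; site-specific rewrites such as mapping index.php?page=home to the home page are not handled. The `canonical_url` helper and its default host are assumptions chosen for illustration.

```python
from urllib.parse import urlsplit, urlunsplit

def canonical_url(url, host="www.genhelm.com"):
    """Return the HTTPS, standard-host form of a URL on this site."""
    # Allow bare host names such as "genhelm.com" by adding a network prefix.
    parts = urlsplit(url if "://" in url else "//" + url)
    path = parts.path or "/"
    return urlunsplit(("https", host, path, parts.query, ""))

for requested in ["genhelm.com", "http://genhelm.com/about?x=1"]:
    target = canonical_url(requested)
    if target != requested:
        print(f"301 redirect {requested} -> {target}")
```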

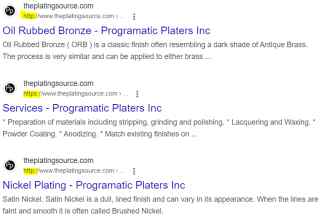

If you don't redirect pages and protocols correctly, search engines could index multiple URLs for the same content or your index could contain a mixture of protocols as we illustrate here using Google's site command:

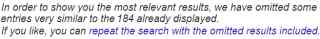

If you see a message like this when you search Google for

site:your-domain.com

it usually means one of two things:

Sometimes you may intentionally have multiple URLs that render the same or similar content. For example, if you have a product catalogue, your pages may be shown under several categories. Here we see three examples of URLs that might lead to the exact same content:

https://www.heavydutytarps.com/color/blue

https://www.heavydutytarps.com/18oz/blue

https://www.heavydutytarps.ca/color/blue

In the case of the first two URLs (which are for the same domain), you should designate one of these pages as the "master" page. Let's say the first link is designated as the master. In such a case, each of these pages should include a canonical link such as the following in the head section of the page:

<link rel="canonical" href="https://www.heavydutytarps.com/color/blue" />

So the master URL's canonical link points to itself and the duplicate URL's canonical link points to the master URL.

The canonical link tells Google and other search engines that the first URL below should not be indexed since this content is substantially the same as the second URL:

https://www.heavydutytarps.com/18oz/blue

https://www.heavydutytarps.com/color/blue

In the case of the .com and .ca links (assuming these domains have the same owner and show similar content), we want to tell Google that these pages are used to serve a different locale or language. This is done using tags similar to the following:

<link rel="alternate" href="https://www.heavydutytarps.ca/color/blue" hreflang="en-ca" />

<link rel="alternate" href="https://www.heavydutytarps.com/color/blue" hreflang="en-us" />

<link rel="alternate" href="https://www.heavydutytarps.com/color/blue" hreflang="en" />

The same alternate links should be rendered for both the .ca and .com pages in this example.
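A quick way to confirm that a page renders the expected canonical and alternate links is to parse its HTML and collect them. This sketch uses Python's built-in `html.parser`; the `LinkAudit` class and `audit_links` function are illustrative names, and fetching the page itself is left out.

```python
from html.parser import HTMLParser

class LinkAudit(HTMLParser):
    """Collect the canonical link and hreflang alternates from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.alternates = {}  # hreflang value -> href

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower()
        if rel == "canonical":
            self.canonical = attributes.get("href")
        elif rel == "alternate" and attributes.get("hreflang"):
            self.alternates[attributes["hreflang"]] = attributes.get("href")

def audit_links(html):
    parser = LinkAudit()
    parser.feed(html)
    return parser
```

Running `audit_links` against both the .com and .ca versions of a page and comparing the two `alternates` dictionaries is one way to verify that the same alternate links appear on each.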

If you are upgrading or changing your site, it is a good idea to use Xenu to generate an XML sitemap for your current (live) site while also using it to generate an XML sitemap for your new site. By comparing these two sitemaps you can identify any pages whose address is no longer served by your site. For all such pages you should define rules to redirect the old URLs to the most suitable URL within your new site. If you are using an Apache web server, these redirects would normally be placed in a file named .htaccess within your site's root folder. These redirects will generally take the form:

Redirect 301 "/old-page-name" "/new-page-name"

After your site is launched, and your redirects are in place, you can use the Google command:

site:yourdomain.com

to get a list of all indexed pages on your site. These index entries may point to pages that no longer exist on your upgraded site. Click on these links to make sure the pages get redirected to the appropriate page in your upgraded site.

Every site should have a favourite icon, named favicon.ico, stored in the root folder of the site.

Most sites contain forms that can be used to send emails to the site owner. Make sure that the target email addresses are correct. Outbound email contains two important email addresses: the From address and the Reply-To address.

Email providers like Gmail, Outlook, Yahoo, etc. perform a lot of checking to help prevent the dissemination of spam through their services. One common characteristic of spam is a From email address that is not consistent with the domain from which the email originated. For this reason, the From address should always be a valid email address defined under your primary domain. This same email address could be used as the From address for many different add-on domains under this domain.

The reply-to address should be the address that the receiver of the email will respond to when using the Reply option of their mail service. This can be any valid email address.
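As an illustration of how the two addresses fit together, the sketch below builds a contact-form message with Python's standard `email` package. All addresses are placeholders and `build_contact_email` is a hypothetical helper; actually sending the message is omitted.

```python
from email.message import EmailMessage

def build_contact_email(visitor_email, body,
                        site_from="webforms@example.com",
                        owner="owner@example.com"):
    """Build a contact-form email that replies go to the visitor."""
    msg = EmailMessage()
    msg["From"] = site_from          # an address on the site's own domain
    msg["To"] = owner                # the site owner receiving the enquiry
    msg["Reply-To"] = visitor_email  # where the owner's reply should go
    msg["Subject"] = "Website contact form"
    msg.set_content(body)
    return msg

message = build_contact_email("visitor@example.org", "Please send me a quote.")
print(message["From"], "/", message["Reply-To"])
```

The visitor's address never appears in the From header, so the message stays consistent with the sending domain while the Reply option still reaches the visitor.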

If your site allows users to upload attachments, it is important to understand where these attachments are stored and how they are named when they are uploaded to your server. If you use the names of the original files when saving the attachments, be sure to check what happens when two users upload attachments having the exact same names.
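One simple way to avoid two uploads overwriting each other is to add a counter to the name when a file with that name already exists. The following is a sketch only: `unique_upload_path` is a hypothetical helper, and a busy site would also need to guard against two requests choosing the same name at the same moment.

```python
from pathlib import Path

def unique_upload_path(upload_dir, original_name):
    """Return a path in upload_dir that does not clash with an existing file."""
    upload_dir = Path(upload_dir)
    # Keep only the file name, dropping any directory parts sent by the client.
    name = Path(original_name).name
    candidate = upload_dir / name
    stem, suffix = candidate.stem, candidate.suffix
    counter = 1
    while candidate.exists():
        candidate = upload_dir / f"{stem}-{counter}{suffix}"
        counter += 1
    return candidate
```

With this approach, a second upload named photo.jpg would be stored as photo-1.jpg rather than replacing the first.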

In general, after launching your site, make sure you test all of your site's forms that trigger emails to make sure they are functioning as expected.

If your site has been implemented using a responsive design, you will want to check the behaviour of each page as you resize the browser window. Normally, your site's head section should also include the following meta tag:

<meta name="viewport" content="width=device-width, initial-scale=1" />

To make it easier for visitors accessing your site from a cell phone to contact you, all telephone numbers should be implemented as tel anchors like so:

<a href="tel:+1-555-555-1212">(555) 555-1212</a>

This section describes things you should consider to help make your site load and render quickly.

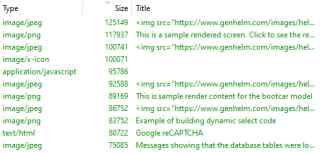

One of the most important factors that will influence the speed with which your site renders is the physical size of the images you are loading. Refer back to the technical section on using Xenu Link Sleuth. One of the great features of Xenu is that it provides a list of all content, including its type and size. If you sort this list by size in descending sequence you can easily identify the largest images on your site. These are the ones on which to focus your attention.

Generally speaking, you should avoid loading images that are larger than about 100 KB, although the right limit depends on the number of images on your page. The more images the page needs to load, the smaller you should try to make them.
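If you export the content list to a file, the same check can be automated. The sketch below assumes each resource is represented as a dictionary with a URL, a content type and a size in bytes; that layout, the sample rows and the `oversized_images` name are all assumptions made for illustration.

```python
def oversized_images(resources, limit_bytes=100 * 1024):
    """Return image resources above the size limit, largest first."""
    large = [r for r in resources
             if r["type"].startswith("image/") and r["size"] > limit_bytes]
    return sorted(large, key=lambda r: r["size"], reverse=True)

# Hypothetical rows, as might be exported from a crawl report.
crawl = [
    {"url": "/images/hero.jpg", "type": "image/jpeg", "size": 420_000},
    {"url": "/images/logo.png", "type": "image/png", "size": 18_000},
    {"url": "/scripts/site.js", "type": "text/javascript", "size": 250_000},
    {"url": "/images/banner.png", "type": "image/png", "size": 150_000},
]
for item in oversized_images(crawl):
    print(item["url"], item["size"])
```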

Since most sites serve a wide range of devices, ranging from cell phones to wide desktop monitors, you should consider using the srcset attribute to allow the browser to choose the optimal image size to load for each device. Here is an example wherein we have created four different image sizes and provided "hints" to help the browser determine which image to load, based on the viewport (window) size the page is being loaded into.

<img src="https://www.genhelm.com/images/wysiwyg-editor-md.jpg"
  srcset="https://www.genhelm.com/images/wysiwyg-editor-tiny.jpg 320w,
    https://www.genhelm.com/images/wysiwyg-editor-xs.jpg 432w,
    https://www.genhelm.com/images/wysiwyg-editor-sm.jpg 576w,
    https://www.genhelm.com/images/wysiwyg-editor-md.jpg 768w"
  sizes="(max-width: 980px) 100vw, 50vw"
  width="768" style="max-width:768px;"
  alt="Building websites using visual editors" class="w100m320" />

Often images can be compressed with little or no discernible loss in quality. Websites such as tinypng.com can be used to shrink your site's images to improve load time. GenHelm users can automatically call tinypng when generating images.

Historically, browsers would load all images referenced by a web page, even those that are "off screen", just in case the user scrolls to the portion of the page that contains the image. Lazy loading of images means delaying their loading until just before they come into view. Most browsers now support lazy loading as a built-in feature, activated simply by adding the attribute loading="lazy" to your image tags. The attribute is ignored by browsers that don't support this feature, so you should get into the habit of adding it to all images that are rendered "below the fold", that is, images that are generally off screen when the page is first loaded.

The number and size of external JavaScript files and stylesheets will influence your site's performance. Generally speaking, you should only add script and styles to external files if they are likely to be shared. If a script or style is only used for one page, it is better to just inline this content into the page in question. If you don't follow this approach, your external support files will gradually get bigger and bigger with each page you develop and much of the content in these files won't pertain to the page that they are being loaded from.

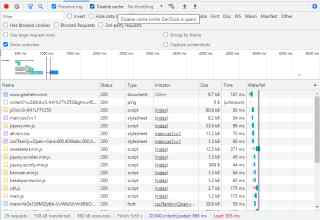

If possible, divide your script and styles into groups that are likely to be used together but avoid having so many separate support files that pages will need to load more that a few of these files. It is a good idea to inspect the main pages of your site using a web browser to see what each page is loading as we show here:

Notice, at the bottom if the screenshot above you can see the number of server requests needed to load the page. Also, pay attention to the size of the resources that your pages load.

All large JavaScript and CSS files should be minified (compressed). You can find lots of websites that will help with this. If you are using GenHelm, you can elect to create minified versions of the JavaScript and CSS files as part of the stow operation. Be careful not to "over minify" your JavaScript. Some tools can actually change the logic and break the code. Therefore, it is a good idea to test your script after it has been minified to make sure there are no side-effects to the minification.

Having a technically sound site that loads quickly won't help you very much if you don't have any visitors. This section discusses things you should review from an SEO perspective to help increase the traffic to your site. One thing to keep in mind, when it comes to SEO, is that there is no "silver bullet". The number one thing you can do to get traffic to your site is to develop content and/or services that people are interested in.

The most important aspect of every page, from an SEO standpoint, is its title. The title tells search engines what your page is all about. It is important not to spam your title by adding keywords that are not germane to the page content. Be sure to keep your page title to a maximum of about 60 characters. Some web developers append common information to every title tag, such as a company name. In many cases this is unnecessary since it is usually pretty easy to optimize your site for searches that include your company name. For example, if a user knows your company by name they are likely going to be able to find your site without much trouble. SEO should focus on getting traffic from visitors who may not be aware of your company (by name) but who are in need of the services you offer.
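The length guideline is easy to check automatically. Here is a minimal sketch; the `check_title` helper and its messages are our own, and 60 characters is the approximate limit suggested above rather than a hard rule.

```python
def check_title(title, max_length=60):
    """Return a list of human-readable problems found in a page title."""
    problems = []
    cleaned = " ".join(title.split())  # collapse runs of whitespace
    if not cleaned:
        problems.append("title is empty")
    elif len(cleaned) > max_length:
        problems.append(
            f"title is {len(cleaned)} characters; aim for about {max_length} or fewer"
        )
    return problems

print(check_title("Heavy Duty Custom Tarps in All Shapes and Sizes"))
```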

The URLs that you use to access your site's pages play an important role in SEO. Page names should be a combination of keywords separated by dashes. For large sites, you can group pages under folder names as in [domain]/main-folder/sub-folder/page-name. If possible, avoid URLs that require a lot of parameters in the querystring and try to keep page names as short as possible while including the most relevant keywords that are included within the content of the page.

Search engines factor in the names of images when indexing your site. Images should always be named using relevant keywords separated by dashes (not underscores). Don't include keywords that are not related to the image since this could result in spamming penalties.
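The same naming rule applies to page names and image names, so a single helper can produce both. This sketch lower-cases the text and joins the words with dashes, treating underscores and punctuation as separators. `slugify` is a hypothetical helper, and choosing which keywords to include remains a manual decision.

```python
import re

def slugify(text):
    """Turn a phrase into lower-case keywords separated by dashes."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words)

print(slugify("Utility Trailer Tarp"))
```

For an image, the file extension would be appended afterwards, for example `slugify("Utility Trailer Tarp") + ".jpg"`.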

In addition to making sure your images have meaningful names, be sure to add descriptive alt tags and titles for all of your images. The Xenu Link Sleuth output shows your image alt tags under the title column so it is easy to check these.

Pages that contain spelling and grammatical errors might be considered lower quality by search engines, which will hurt their ranking. Here is a good site that you can use to check your site's spelling.

Search engines expect your site to have a privacy policy which describes how you deal with data collected from or about your web visitors. If your site was built in GenHelm, you can use this default privacy policy that is defined within the system so that it can be shared by any number of sites.

In the technical quality section, we described the importance of XML sitemaps. These are used internally by search engines. Additionally, you should have a site map page that can be accessed from all of your other pages. This will not only help your users find pages that they may be interested in, but this page will also provide internal links which also improve your site's ranking.

If your site is used to promote a local business, consider adding a local business schema. Here is an example of such a schema:

<script type="application/ld+json" id="localbusiness_script">
{
  "@context": "http://schema.org",
  "@type": "Attorney",
  "additionalType": "http://www.productontology.org/id/Personal_injury_lawyer",
  "name": "Dietrich Law Office",
  "legalName": "Dietrich Law Personal Injury and Disability Lawyers",
  "url": "https://www.dietrichlaw.ca/",
  "description": "Personal injury law and disability law.",
  "logo": "https://www.dietrichlaw.ca/images/dietrich-logo-sm.jpg",
  "sameAs": ["https://www.facebook.com/DietrichLawCanada", "https://twitter.com/dietrich_law",
    "https://www.youtube.com/user/dietrichlawoffice"],
  "address": {
    "@type": "PostalAddress",
    "streetAddress": "141 Duke Street East",
    "addressLocality": "Kitchener",
    "addressRegion": "Ontario",
    "postalCode": "N2H 1A6",
    "addressCountry": "Canada"
  },
  "image": "https://www.dietrichlaw.ca/images/dietrich-law-building-tiny.jpg",
  "telephone": "519-749-0770",
  "areaServed": ["Kitchener ON", "Cambridge ON", "Guelph ON", "Waterloo ON", "Brantford ON",
    "Stratford ON", "Woodstock ON", "Weltliste ON", "Ingersoll ON", "Elora ON"],
  "hasMap": "https://www.google.ca/maps/place/Dietrich+Law+Office/@43.4488666,-80.4838296,15z/data=!4m5!3m4!1s0x0:0xd1f410b7b7539006!8m2!3d43.4489054!4d-80.483795",
  "geo": {
    "@type": "GeoCoordinates",
    "latitude": "43.4488666",
    "longitude": "-80.4838296"
  }
}
</script>

Every page on your site should have a unique meta description. If these are reasonably short and well-written, Google and other search engines will often use the descriptions you provide in their search results. Here is an example of a meta description.

<meta name="description" content="We make heavy duty custom tarps in all shapes and sizes." />

You can see that Google has used this information from the meta description, as well as some information from the page itself to build its description.

Notice that SERP results, shown above, include stars and a rating. If you sell a product or service that has positive user ratings, you can add metadata to your page which search engines may use to show the ratings and stars in your search results. Here is the data that was generated into the SERPs above:

<script type="application/ld+json" id="dollar_review_rating_HeavyDutyTarp_script">
{
  "@context": "https://schema.org/",
  "@type": "Product",
  "name": "Heavy Duty Tarp",
  "image": "https://www.heavydutytarps.ca/images/utility-trailer-tarp-md.jpg",
  "brand": "Heavy Duty Tarps",
  "aggregateRating": {
    "@type": "AggregateRating",
    "ratingValue": "4.9",
    "ratingCount": "89"
  }
}
</script>

If you are using GenHelm, ratings schema like the one above can be created using the dollar function review_rating.
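If you are not using GenHelm, a ratings block of the same shape can be generated from your own data. The sketch below is not the GenHelm review_rating function; `rating_jsonld` is a hypothetical helper that builds the JSON with Python's standard `json` module, leaving you to wrap the result in the script tag.

```python
import json

def rating_jsonld(name, image, brand, rating_value, rating_count):
    """Return a Product schema with an aggregate rating as a JSON string."""
    data = {
        "@context": "https://schema.org/",
        "@type": "Product",
        "name": name,
        "image": image,
        "brand": brand,
        "aggregateRating": {
            "@type": "AggregateRating",
            # Values are written as strings to match the example schema.
            "ratingValue": str(rating_value),
            "ratingCount": str(rating_count),
        },
    }
    return json.dumps(data, indent=2)

print(rating_jsonld(
    "Heavy Duty Tarp",
    "https://www.heavydutytarps.ca/images/utility-trailer-tarp-md.jpg",
    "Heavy Duty Tarps",
    4.9,
    89,
))
```

Generating the block from stored ratings, rather than typing it by hand, keeps the published values in step with the real review data and avoids syntax slips such as a missing comma or a misspelled property name.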